Introduction to Data Science Coursework Report

Section A: Business Understanding

Myalgic encephalomyelitis/chronic fatigue syndrome (ME/CFS) is a complex condition that affects multiple body systems. It is characterised by chronic fatigue, post-exertional malaise (a worsening of symptoms after physical effort), and cognitive difficulties. Although it can be severely debilitating, its diagnosis remains contested within medicine. Patients often wait a long time for a diagnosis and may be misclassified (Wotherspoon, 2021).

The symptoms of depression overlap substantially with those of ME/CFS, including fatigue, low mood, low energy, and impaired functioning. This makes it difficult to distinguish ME/CFS, depression, and their co-occurrence in clinical practice.

Our project builds a supervised machine-learning classifier that predicts which of three diagnostic categories a patient falls into: ME/CFS, Depression, or Both. The model is not intended to diagnose patients formally; it is intended to support triage so that health services can focus on the patients who need further evaluation. Depressive symptoms can be screened with validated instruments such as the PHQ-9 (Wang et al., 2021), and overall fatigue burden can be measured with the Fatigue Severity Scale (Aronson et al., 2023). The model includes these measures because they can help identify high-risk patients at an earlier stage.

Misclassifications carry real costs. If the model wrongly indicates that a person does not have ME/CFS, they may be encouraged to over-exercise, which could worsen their condition. An undetected case of depression may delay mental-health care. These risks mean the model has to be evaluated with measures that weight all three classes equally. We therefore chose macro-averaged precision, recall, and F1-score.
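To make the choice of metric concrete, the sketch below computes macro-averaged precision, recall, and F1 by hand. The labels are a toy example invented for illustration (they are not our results), and the helper name `macro_scores` is our own.

```python
def macro_scores(y_true, y_pred):
    """Return macro-averaged (precision, recall, F1) across all observed labels."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    per_class = []
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        # Undefined ratios (no predictions or no true cases) are scored as 0.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_class.append((precision, recall, f1))
    n = len(labels)
    # Unweighted mean: every class counts equally, whatever its size.
    return tuple(sum(scores[i] for scores in per_class) / n for i in range(3))


# Toy labels (illustrative only): a model that always predicts "Depression".
y_true = ["Depression"] * 6 + ["ME/CFS"] * 3 + ["Both"] * 1
y_pred = ["Depression"] * 10

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
macro_precision, macro_recall, macro_f1 = macro_scores(y_true, y_pred)
print(f"accuracy={accuracy:.2f}  macro F1={macro_f1:.2f}")
```

In this toy case accuracy is 0.60 while macro F1 is only 0.25, because the two classes that are never predicted each contribute an F1 of zero to the average.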

Across the broader digital-health domain, researchers report that machine-learning-based solutions tend to be adopted slowly because of stakeholder concerns about trust, explainability, and accountability (Landers et al., 2023). In addition, decision-support systems do not necessarily improve clinicians' judgements, and they may introduce variability if they are not properly integrated (Vasey et al., 2021). These findings imply that our models must be simple, reliable, and suited to their specific context of use.

Research on ME/CFS emphasises listening to patient narratives, acknowledging the history of the disease's marginalisation, and using digital resources responsibly (Agarwal & Friedman, 2025). These ethical considerations shaped our choice of models, the way we evaluated them, and our aim of treating all diagnostic groups equally.

Section B: Data Exploration

The dataset contains numerical and nominal variables covering fatigue, mood, sleep, lifestyle, and demographics. The data loaded correctly and the variable types were appropriate. A small number of missing values were handled by imputation within the modelling pipeline. Summary statistics showed mild skewness in the PHQ-9 and fatigue scores, which is typical of symptom-based data (Aronson et al., 2023; Wang et al., 2021).

· Class distribution

The diagnosis categories were moderately imbalanced.

Figure 1 Class distribution of diagnosis

This pattern justifies the use of macro-metrics to ensure equal weighting of minority classes.

· Symptom distributions

PHQ-9 scores were higher in individuals labelled Depression and Both.

Figure 2 Distribution of PHQ-9 scores by diagnosis

This aligns with clinical evidence that PHQ-9 is a valid indicator of depressive symptom severity (Wang et al., 2021).

Fatigue Severity Scale values were higher in ME/CFS and Both, consistent with known disease patterns.

Figure 3 Fatigue Severity Scale scores by diagnosis

This is consistent with findings that fatigue scores strongly differentiate symptom burden in chronic health conditions (Aronson et al., 2023).

· Feature relevance

Categorical variables such as post-exertional malaise (PEM) showed strong associations with the ME/CFS class. PEM is recognised as a core symptom of ME/CFS and contributes meaningfully to group separation (Agarwal & Friedman, 2025). Lifestyle and sleep-related variables also contributed but showed weaker separation.

Overall, the exploration established that:

- Symptom measures provide useful discriminative patterns

- Moderate class imbalance requires fair evaluation methods

- Relationships between fatigue and mood reflect clinical literature

- Both linear and non-linear patterns are present, justifying testing of both model families.

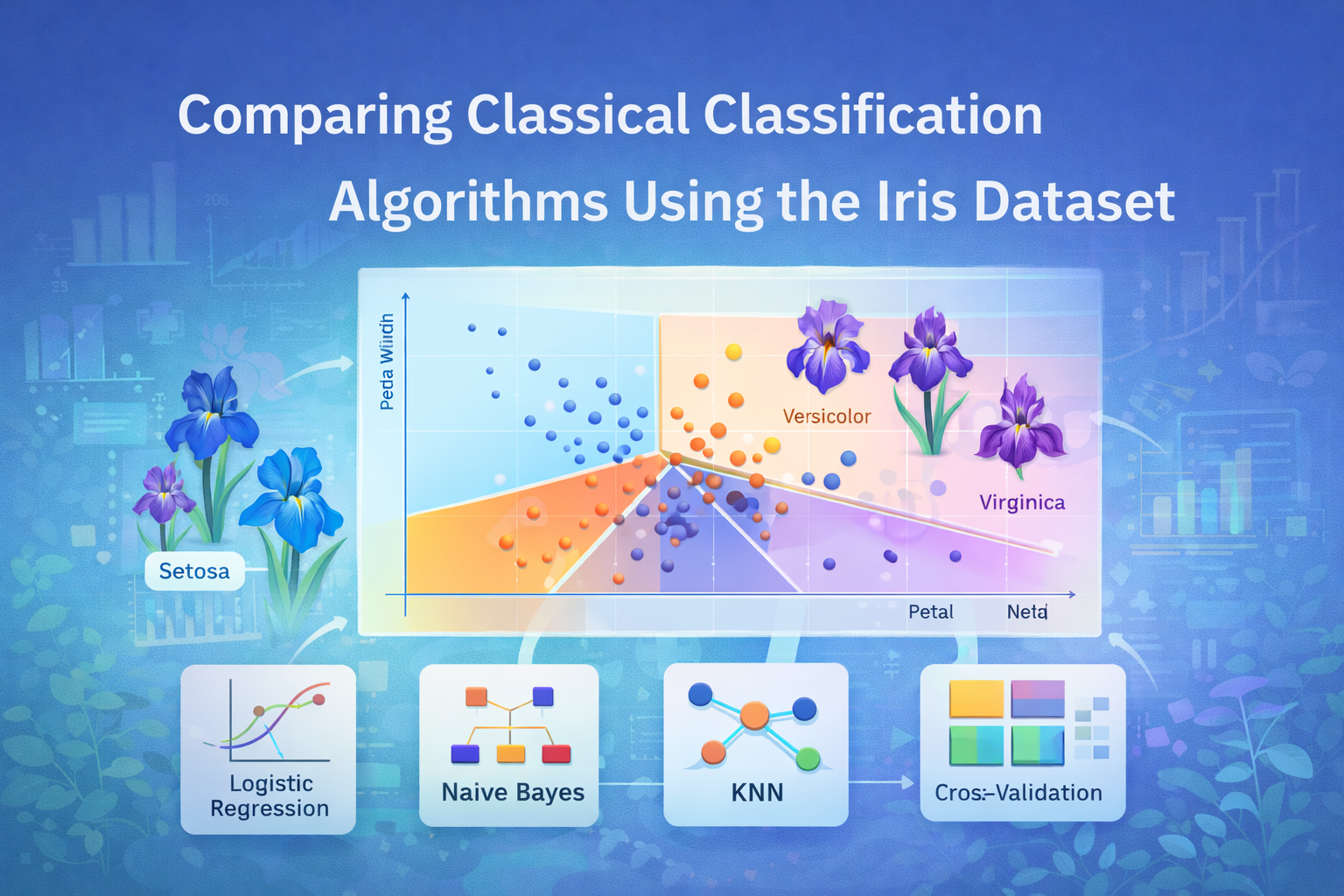

Section C: Model Building

The modelling workflow followed standard best practice. To preserve the class proportions, we divided the data using a stratified 80/20 split. We built a preprocessing pipeline with ColumnTransformer so that all models were evaluated on identically prepared data. Numeric features were median-imputed and standardised; categorical features were mode-imputed and one-hot encoded.
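A minimal sketch of this split-and-preprocess step is given below. It runs on a small synthetic stand-in table; the column names (`phq9_score`, `fatigue_severity`, `pem_present`, `diagnosis`), the class counts, and the random seeds are assumptions made for illustration rather than the actual dataset schema.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Synthetic stand-in data; column names and values are hypothetical.
rng = np.random.default_rng(0)
n = 100
df = pd.DataFrame({
    "phq9_score": rng.integers(0, 28, n).astype(float),
    "fatigue_severity": rng.uniform(1, 7, n),
    "pem_present": pd.Series(rng.choice(["yes", "no"], n), dtype=object),
    "diagnosis": np.repeat(["ME/CFS", "Depression", "Both"], [40, 40, 20]),
})
# Inject a few missing values so the imputers have something to do.
df.loc[::10, "phq9_score"] = np.nan
df.loc[5::20, "pem_present"] = np.nan

numeric_features = ["phq9_score", "fatigue_severity"]
categorical_features = ["pem_present"]

# Numeric columns: median imputation, then standardisation.
numeric_pipeline = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])
# Categorical columns: mode imputation, then one-hot encoding.
categorical_pipeline = Pipeline([
    ("impute", SimpleImputer(strategy="most_frequent")),
    ("encode", OneHotEncoder(handle_unknown="ignore")),
])
preprocessor = ColumnTransformer([
    ("num", numeric_pipeline, numeric_features),
    ("cat", categorical_pipeline, categorical_features),
])

X = df.drop(columns="diagnosis")
y = df["diagnosis"]
# Stratifying on y keeps the class proportions the same in both partitions.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Fit the transformers on the training partition only, then reuse them.
X_train_prepared = preprocessor.fit_transform(X_train)
X_test_prepared = preprocessor.transform(X_test)
print(X_train_prepared.shape, y_test.value_counts().to_dict())
```

Fitting the imputers and scaler on the training partition alone means no information from the held-out data leaks into preprocessing.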

A DummyClassifier served as a baseline, predicting the most frequent class.
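As a rough illustration of what such a baseline looks like, the sketch below fits a most-frequent-class DummyClassifier on made-up labels. The class counts are assumed for the example and do not reflect our dataset.

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score, f1_score

# Illustrative labels only: an assumed 50/30/20 class mix.
y = np.array(["Depression"] * 50 + ["ME/CFS"] * 30 + ["Both"] * 20)
X = np.zeros((len(y), 1))  # the dummy model ignores the features

baseline = DummyClassifier(strategy="most_frequent")
baseline.fit(X, y)
pred = baseline.predict(X)

baseline_accuracy = accuracy_score(y, pred)
# zero_division=0 scores the never-predicted classes as 0 without warnings.
baseline_macro_f1 = f1_score(y, pred, average="macro", zero_division=0)
print(f"accuracy={baseline_accuracy:.2f}  macro F1={baseline_macro_f1:.2f}")
```

Any candidate model should clear this floor on macro F1 before its results are worth interpreting.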

Three models from the module content were selected:

- Multinomial Logistic Regression; interpretable and stable, representing linear relationships.

- Decision Tree Classifier; able to capture interactions but sensitive to overfitting.

- Random Forest Classifier; an ensemble method with strong performance in mixed-feature environments.

· Hyperparameter tuning

GridSearchCV with 5-fold stratified cross-validation was used, optimising macro F1-score. Macro F1 was chosen because:

- It treats each diagnostic category equally

- It prevents dominance by the most common class

- It aligns with fairness concerns in health-data contexts (Landers et al., 2023)

Each model was tuned using a compact grid to balance performance with computational efficiency. Logistic Regression tested regularisation strengths; the Decision Tree varied depth and minimum samples; the Random Forest tested number of trees, depth, and splitting criteria.
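The tuning procedure can be sketched roughly as follows. The data come from `make_classification`, and the grid values are placeholders chosen to keep the example fast; they are assumptions, not the grids used in this report. In the full workflow each estimator would sit behind the preprocessing pipeline.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.tree import DecisionTreeClassifier

# Synthetic, moderately imbalanced three-class data (stand-in only).
X, y = make_classification(
    n_samples=300, n_features=8, n_informative=5, n_classes=3,
    weights=[0.45, 0.35, 0.20], random_state=0,
)

# Compact, illustrative grids; the specific values are assumptions.
candidates = {
    "logistic_regression": (
        LogisticRegression(max_iter=1000),
        {"C": [0.1, 1.0, 10.0]},
    ),
    "decision_tree": (
        DecisionTreeClassifier(random_state=0),
        {"max_depth": [3, 5, None], "min_samples_leaf": [1, 5]},
    ),
    "random_forest": (
        RandomForestClassifier(random_state=0),
        {"n_estimators": [50, 100], "max_depth": [5, None],
         "criterion": ["gini", "entropy"]},
    ),
}

# The same stratified folds are reused for every model family.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
results = {}
for name, (estimator, grid) in candidates.items():
    search = GridSearchCV(estimator, grid, scoring="f1_macro", cv=cv)
    search.fit(X, y)
    results[name] = (search.best_score_, search.best_params_)

# Rank the model families by their best cross-validated macro F1.
for name, (score, params) in sorted(results.items(), key=lambda kv: -kv[1][0]):
    print(f"{name}: macro F1={score:.3f} with {params}")
```

Sharing one `StratifiedKFold` object across the searches keeps the comparison between model families like-for-like.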

Cross-validation results showed that Random Forest achieved the highest macro F1, followed by Logistic Regression, with Decision Tree performing worst. This is consistent with known performance characteristics of ensemble methods in structured health datasets. Random Forest was therefore selected as the final model.

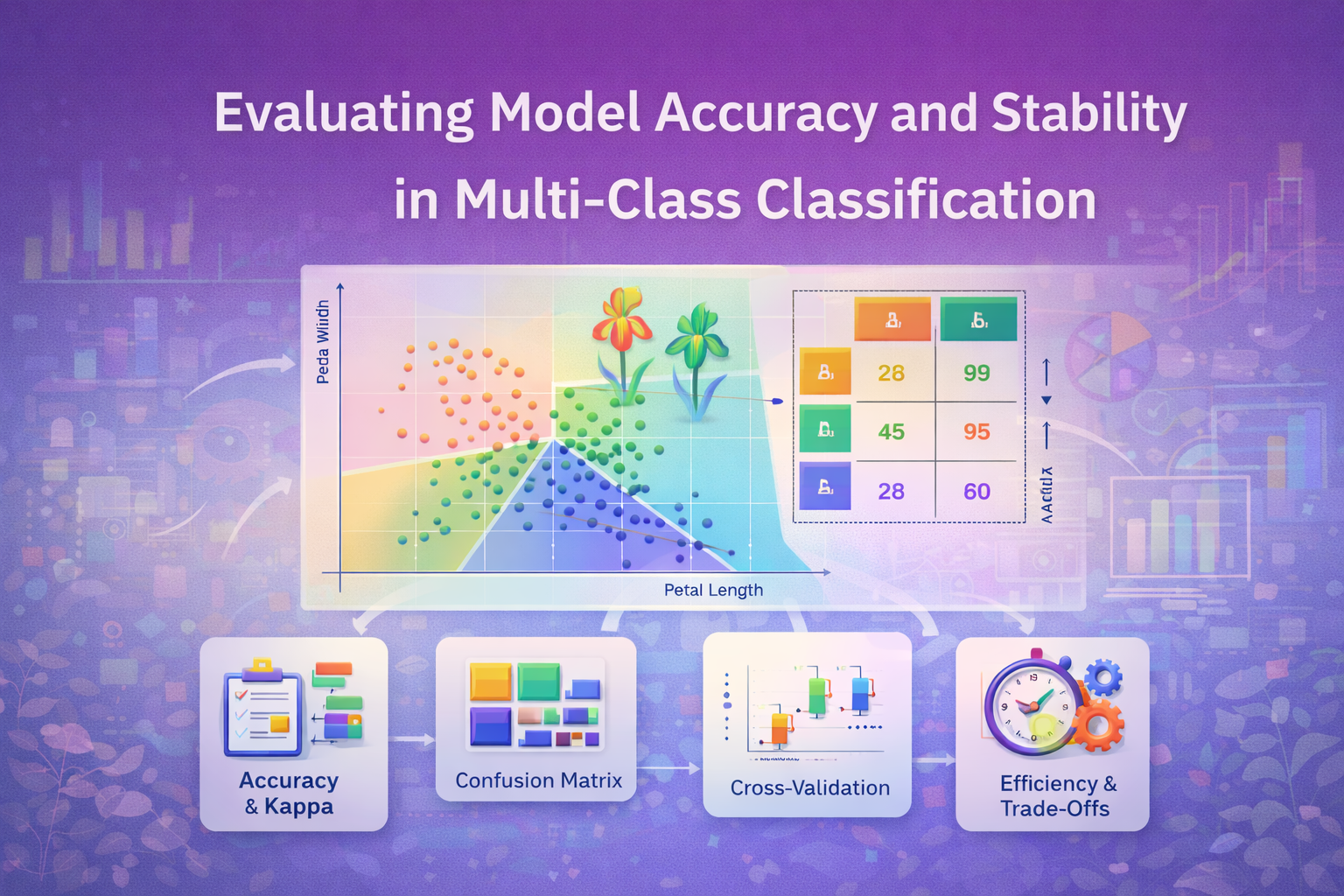

Section D: Model Evaluation

Each model was evaluated using precision, recall, F1-score, and a confusion matrix.
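For reference, these outputs can be produced along the following lines. The true and predicted labels here are toy values written out by hand to show the mechanics; they are not the predictions of any model in this report.

```python
from sklearn.metrics import classification_report, confusion_matrix

labels = ["ME/CFS", "Depression", "Both"]

# Hand-written toy labels (illustrative only).
y_true = ["ME/CFS"] * 4 + ["Depression"] * 4 + ["Both"] * 2
y_pred = ["ME/CFS", "ME/CFS", "ME/CFS", "Both",
          "Depression", "Depression", "Depression", "Both",
          "Both", "Depression"]

# Rows are true classes and columns are predicted classes, in `labels` order.
cm = confusion_matrix(y_true, y_pred, labels=labels)
# The report lists per-class precision, recall and F1 plus the macro average.
report = classification_report(y_true, y_pred, labels=labels, zero_division=0)

print(cm)
print(report)
```

Passing `labels` explicitly fixes the row and column order, which makes confusion matrices from different models directly comparable.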

· DummyClassifier

As expected, the baseline performed poorly, failing to recognise minority classes. This confirms the need for predictive modelling.

· Logistic Regression

This model achieved moderate performance, capturing linear trends in PHQ-9 and fatigue scores. However, misclassifications occurred mainly between Depression and Both, reflecting symptom overlap described in literature (Wotherspoon, 2021).

· Decision Tree

Although the tree captured some non-linear relationships, it demonstrated overfitting and unstable decision boundaries, a known issue in small or imbalanced clinical datasets (Vasey et al., 2021).

· Random Forest

The final model achieved the best macro-averaged metrics across all three classes.

Figure 4 Confusion matrix of Random Forest

Performance improvements included:

- Higher recall for the minority Both class

- Fewer misclassifications between Depression and ME/CFS

- Better modelling of PEM-related interactions

These results align with evidence that ensemble methods handle heterogeneous symptom patterns more effectively (Agarwal & Friedman, 2025). However, misclassification between Depression and Both still occurred, reflecting genuine clinical ambiguity.

Machine-learning evaluation in healthcare must be cautious. Systematic reviews show that clinical decision-support tools often improve accuracy only when integrated appropriately and supported by adequate training (Vasey et al., 2021). In addition, digital-health stakeholders emphasise transparency and fairness as critical requirements (Landers et al., 2023). Thus, model outputs should be advisory rather than diagnostic.

Section E: Implementation and Business Case

The Random Forest model is recommended for deployment due to its strong performance, robustness to missingness, and ability to model complex clinical patterns. Deployment should follow a structured integration approach:

· Input Schema Requirements:

All input fields used during training (e.g., fatigue severity, PHQ-9, sleep quality, PEM presence) must be included with correct formatting. As PHQ-9 and FSS have validated structures (Wang et al., 2021; Aronson et al., 2023), consistent data collection is essential.
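One possible way to enforce such a schema at the point of data entry is sketched below. The field names, the 0 to 10 sleep-quality scale, and the `validate_record` helper are hypothetical; the PHQ-9 and FSS ranges follow the standard scoring of those instruments (PHQ-9 total 0 to 27, FSS mean item score 1 to 7).

```python
# Hypothetical schema: field names and the sleep-quality scale are assumptions.
SCHEMA = {
    "phq9_score": {"type": (int,), "min": 0, "max": 27},
    "fatigue_severity": {"type": (int, float), "min": 1, "max": 7},
    "sleep_quality": {"type": (int, float), "min": 0, "max": 10},
    "pem_present": {"type": (str,), "allowed": {"yes", "no"}},
}


def validate_record(record):
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    for field, rule in SCHEMA.items():
        value = record.get(field)
        if value is None:
            errors.append(f"{field}: missing")
            continue
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, rule["type"]):
            errors.append(f"{field}: unexpected type {type(value).__name__}")
            continue
        if "allowed" in rule and value not in rule["allowed"]:
            errors.append(f"{field}: {value!r} not in {sorted(rule['allowed'])}")
        if "min" in rule and not rule["min"] <= value <= rule["max"]:
            errors.append(
                f"{field}: {value} outside [{rule['min']}, {rule['max']}]"
            )
    return errors


valid = {"phq9_score": 12, "fatigue_severity": 5.4,
         "sleep_quality": 3, "pem_present": "yes"}
invalid = {"phq9_score": 30, "fatigue_severity": 5.4, "sleep_quality": 3}

print(validate_record(valid))
print(validate_record(invalid))
```

Rejecting malformed records before they reach the model keeps out-of-range scores from being silently scaled and scored.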

· Operational Role:

The model should function as a triage-support tool that flags complex cases for additional assessment. It must not replace diagnostic evaluation. This aligns with evidence that decision-support systems are beneficial only when clinicians maintain final responsibility (Vasey et al., 2021).

· Monitoring and Maintenance:

Following responsible-digital-health principles, ongoing evaluation should monitor class distributions, calibration drift, and fairness outcomes (Landers et al., 2023). Retraining may be required if new patient groups or changed symptom patterns emerge.
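A simple check on class-distribution shift could look like the sketch below, which compares the mix of recent predictions against the training mix using total variation distance. The counts, the 0.10 alert threshold, and the function names are all assumptions for illustration; calibration and fairness monitoring would need separate checks.

```python
from collections import Counter

CLASSES = ["ME/CFS", "Depression", "Both"]
DRIFT_THRESHOLD = 0.10  # assumed alert level, to be agreed with the service


def class_proportions(labels, classes):
    """Share of each class in `labels`, including classes that never occur."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    return {c: counts.get(c, 0) / len(labels) for c in classes}


def total_variation_distance(reference, current):
    """Half the summed absolute difference between two distributions."""
    return 0.5 * sum(abs(reference[c] - current[c]) for c in reference)


# Made-up counts standing in for training labels and a recent batch.
training_labels = ["ME/CFS"] * 40 + ["Depression"] * 40 + ["Both"] * 20
recent_predictions = ["ME/CFS"] * 25 + ["Depression"] * 60 + ["Both"] * 15

reference = class_proportions(training_labels, CLASSES)
current = class_proportions(recent_predictions, CLASSES)
drift = total_variation_distance(reference, current)
needs_review = drift > DRIFT_THRESHOLD

print(f"drift={drift:.2f}  needs_review={needs_review}")
```

With these made-up counts the distance is 0.20, which exceeds the assumed threshold and would prompt a review of whether retraining is needed.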

· Interpretation and Explainability:

Feature-importance outputs can support clinician understanding of model behaviour. Given long-standing issues of mistrust among ME/CFS patients (Wotherspoon, 2021), transparent communication of model limitations is essential.
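As an indication of how such outputs might be generated, the sketch below reads impurity-based importances from a fitted Random Forest. The data are contrived so that one hypothetical feature (`pem_present`) fully determines the label; the feature names and the resulting ranking are illustrative only and say nothing about our fitted model.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

# Contrived data: hypothetical feature names, two of which are pure noise.
rng = np.random.default_rng(0)
n = 300
X = pd.DataFrame({
    "pem_present": rng.integers(0, 2, n),
    "sleep_hours": rng.normal(7, 1, n),
    "caffeine_intake": rng.normal(2, 1, n),
})
# The label depends on pem_present alone, so it should rank first.
y = np.where(X["pem_present"] == 1, "ME/CFS", "Depression")

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Impurity-based importances sum to 1 across features.
importances = pd.Series(
    model.feature_importances_, index=X.columns
).sort_values(ascending=False)
print(importances.round(3))
```

Impurity-based importances can overstate high-cardinality features, so they are best presented to clinicians as a rough guide rather than as evidence of causal relevance.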

· Limitations:

The dataset is synthetic, reducing generalisability to real-world populations. Symptoms such as PEM are complex and may require more granular measurement. Clinical validation studies would be needed before service-level implementation.

References

Agarwal, P., & Friedman, K. J. (2025). Reframing myalgic encephalomyelitis/chronic fatigue syndrome (ME/CFS): biological basis of disease and recommendations for supporting patients. Healthcare, 13(15), 1917. https://doi.org/10.3390/healthcare13151917

Aronson, K. I., Martin-Schwarze, A. M., Swigris, J. J., Kolenic, G., Krishnan, J. K., Podolanczuk, A. J., … & Pulmonary Fibrosis Foundation. (2023). Validity and reliability of the fatigue severity scale in a real-world interstitial lung disease cohort. American Journal of Respiratory and Critical Care Medicine, 208(2), 188–195. https://doi.org/10.1164/rccm.202208-1504OC

Landers, C., Vayena, E., Amann, J., & Blasimme, A. (2023). Stuck in translation: Stakeholder perspectives on impediments to responsible digital health. Frontiers in Digital Health, 5, 1069410. https://doi.org/10.3389/fdgth.2023.1069410

Vasey, B., Ursprung, S., Beddoe, B., Taylor, E. H., Marlow, N., Bilbro, N., … & McCulloch, P. (2021). Association of clinician diagnostic performance with machine learning–based decision support systems: a systematic review. JAMA Network Open, 4(3), e211276. https://jamanetwork.com/journals/jamanetworkopen/fullarticle/2777403

Wang, L., Kroenke, K., Stump, T. E., & Monahan, P. O. (2021). Screening for perinatal depression with the Patient Health Questionnaire depression scale (PHQ-9): A systematic review and meta-analysis. General Hospital Psychiatry, 68, 74–82. https://doi.org/10.1016/j.genhosppsych.2020.12.007

Wotherspoon, N. (2021). Exploring the contested diagnosis of chronic fatigue syndrome/myalgic encephalomyelitis (Doctoral dissertation, University of Sheffield).
