The rise of artificial intelligence in academic writing has prompted universities to reconsider how originality and authorship are assessed. Alongside traditional plagiarism checks, many institutions now experiment with AI essay detection tools designed to estimate whether a piece of writing may have been generated or heavily assisted by AI.

For students, this shift has introduced uncertainty and anxiety. Questions around reliability, false positives, and acceptable AI use are common. This article explains how AI essay detection works, what it can and cannot prove, and how students can approach academic writing responsibly in an era of automated scrutiny.

What Is AI Essay Detection?

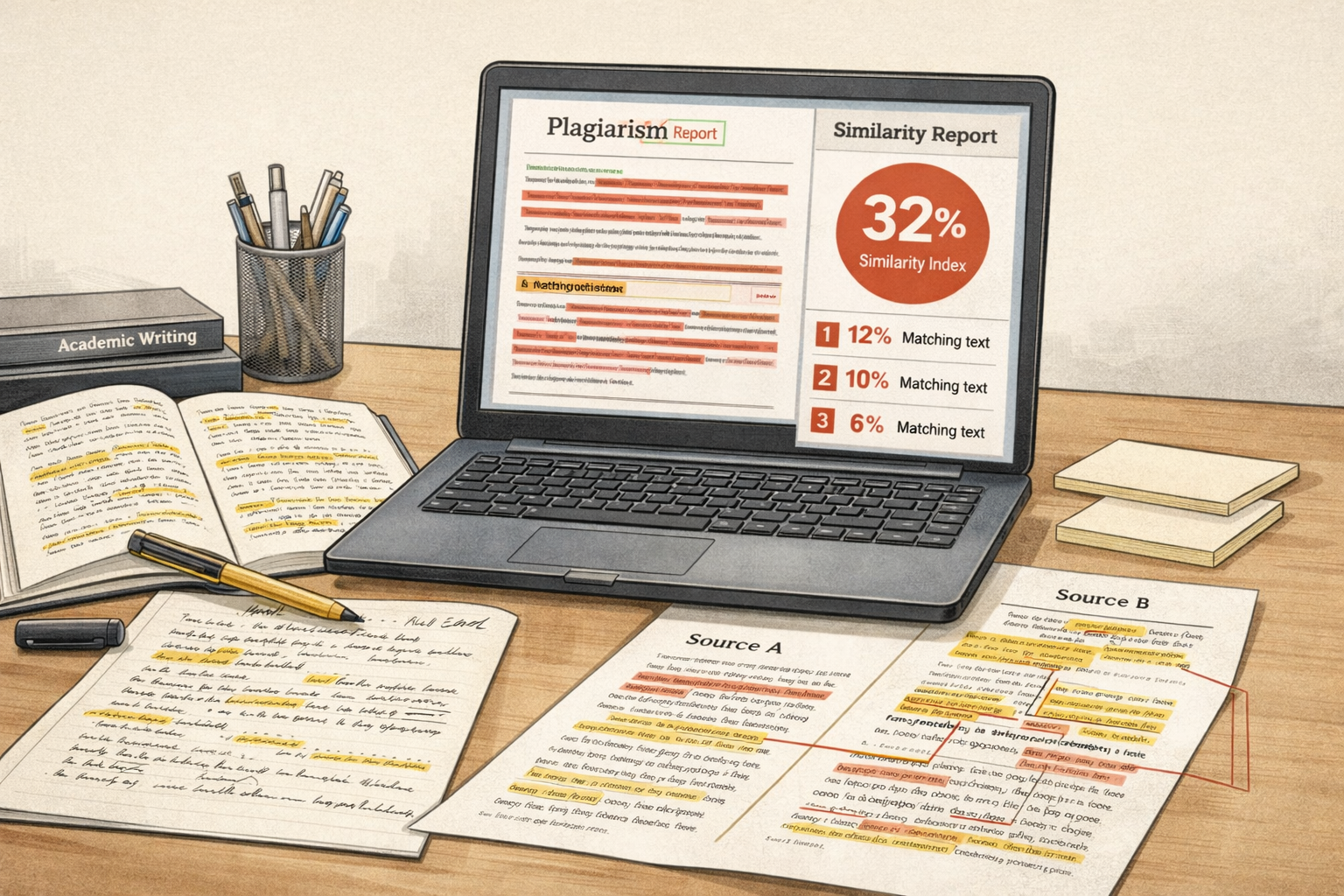

AI essay detection refers to computational methods used to analyse text and estimate whether it was produced by a human writer or generated by an artificial intelligence system. Unlike plagiarism detection, which compares text against existing sources, AI detection relies on probabilistic patterns rather than direct matches.

These tools do not identify authorship with certainty. Instead, they generate likelihood scores based on linguistic features such as sentence predictability, vocabulary distribution, and structural regularity.

Key clarification: AI detection tools provide probability estimates, not definitive proof of AI authorship.

How AI Essay Detection Tools Work

Most AI detection systems are trained on large datasets containing both human-written and AI-generated texts. From these datasets, algorithms learn statistical differences that may distinguish machine output from human writing.

Common indicators include low variability in sentence length, highly uniform grammar, and predictable word choice. However, these characteristics can also appear in polished academic writing, especially after extensive editing.
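The Python sketch below makes these indicators concrete with two crude proxies. The first is the coefficient of variation of sentence lengths, which measures how much sentence length varies. The second is the type-token ratio, the share of distinct words in the text. These measures and the function names `sentence_length_cv` and `type_token_ratio` are illustrative assumptions. Commercial detectors generally rely on trained language models rather than hand-written rules like these, and neither number says anything about authorship on its own.

```python
import re
import statistics


def split_sentences(text: str) -> list[str]:
    # Naive split after ., ! or ? followed by whitespace; real tools use proper tokenisers.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def sentence_length_cv(text: str) -> float:
    """Coefficient of variation of sentence lengths in words (stdev / mean).
    Lower values mean more uniform sentence lengths."""
    lengths = [len(s.split()) for s in split_sentences(text)]
    if len(lengths) < 2:
        return 0.0
    return statistics.stdev(lengths) / statistics.mean(lengths)


def type_token_ratio(text: str) -> float:
    """Distinct words divided by total words: a crude vocabulary-diversity measure."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words)) / len(words) if words else 0.0


uniform = "The model is useful. The data is clean. The result is clear."
varied = "It works. After months of fieldwork, the data finally told a coherent story. Why?"

print(round(sentence_length_cv(uniform), 2))  # 0.0
print(round(sentence_length_cv(varied), 2))   # 1.18
print(round(type_token_ratio(uniform), 2))
```

The uniform passage scores 0.0 on sentence-length variation. Careful human editing can produce exactly this kind of regularity, which is why such signals cannot settle authorship.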

Detection Scores and Their Meaning

Detection results are typically presented as percentage likelihoods rather than categorical judgments. A high score does not confirm misconduct, just as a low score does not guarantee purely human authorship.

Responsible institutions treat these scores as indicators for further review rather than automatic evidence of wrongdoing.

Why AI Detection Is Inherently Limited

AI detection tools face structural limitations because language itself is probabilistic. Skilled human writers often produce text that appears statistically “predictable,” while AI models increasingly generate outputs that resemble natural human variation.

As AI models evolve, detection accuracy fluctuates. This creates a technological arms race where improvements in generation reduce the reliability of detection.

| Aspect | AI Essay Detection | Plagiarism Detection |
|---|---|---|
| Basis of analysis | Statistical language patterns | Textual similarity to sources |
| Certainty level | Probabilistic | Evidence-based |
| False positive risk | Relatively high | Lower when interpreted correctly |
| Primary use | Screening and review support | Source attribution |

This comparison highlights why AI detection results must be interpreted cautiously and contextually.

How Universities Actually Use AI Detection Results

Contrary to popular belief, most universities do not rely solely on AI detection software to accuse students of misconduct. Instead, results are used as part of a broader academic judgment.

Lecturers consider writing quality, alignment with course material, citation accuracy, and consistency with a student’s prior work. AI detection may trigger closer review, but it rarely serves as standalone evidence.

Human Review Remains Central

Academic staff are trained to assess reasoning depth, conceptual understanding, and disciplinary engagement. These qualities cannot be reliably measured by automated systems.

As a result, essays that demonstrate genuine engagement with sources and coherent argumentation are less likely to raise concerns, even when a detection score is elevated.

False Positives and Student Risk

One of the most serious issues with AI essay detection is the possibility of false positives. Highly structured, well-edited academic writing can resemble AI output, especially in technical or formal disciplines.

Students writing in a clear, neutral academic style may therefore be flagged despite producing original work. This reinforces the importance of transparency and documentation in the writing process.

Critical warning: AI detection scores should never be interpreted as proof without corroborating academic evidence.
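Bayes' rule explains why a flag can mislead even when a tool is fairly accurate. The sketch below uses purely hypothetical figures, not measurements of any real tool. It assumes that 10% of essays in a cohort of 1,000 are AI-generated, that the detector flags 90% of those, and that it wrongly flags 5% of human-written essays. The function name `prob_ai_given_flag` is chosen for illustration.

```python
def prob_ai_given_flag(base_rate: float, true_positive_rate: float,
                       false_positive_rate: float) -> float:
    """Bayes' rule: probability an essay is AI-generated, given that it was flagged."""
    flagged_ai = base_rate * true_positive_rate            # AI essays correctly flagged
    flagged_human = (1 - base_rate) * false_positive_rate  # human essays wrongly flagged
    return flagged_ai / (flagged_ai + flagged_human)


# Hypothetical rates for illustration only.
BASE_RATE = 0.10
TPR = 0.90
FPR = 0.05
COHORT = 1000

p = prob_ai_given_flag(BASE_RATE, TPR, FPR)
false_flags = COHORT * (1 - BASE_RATE) * FPR

print(f"P(AI | flagged) = {p:.2f}")                           # P(AI | flagged) = 0.67
print(f"Human essays wrongly flagged: {round(false_flags)}")  # 45
```

Under these assumed rates, 135 essays would be flagged, and 45 of them would be human-written: about one flagged essay in three. A flag therefore calls for review, not a verdict.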

AI Assistance Versus AI Generation

A crucial distinction exists between using AI as a support tool and submitting AI-generated text as one’s own work. Many institutions permit limited AI assistance for editing, grammar, or structural feedback.

Problems arise when AI outputs replace independent thinking, analysis, or interpretation. Students remain responsible for intellectual ownership of all submitted work.

For students unsure how to refine language while maintaining originality, structured support such as academic editing and proofreading services can be an alternative, provided it stays within their institution's rules on editorial help.

Academic Integrity in the Age of AI Detection

AI essay detection has intensified the importance of academic integrity, but it has not redefined its core principles. Authorship, honesty, and accountability remain central.

Students who can explain their arguments, defend their methodology, and demonstrate engagement with sources are best positioned to navigate AI scrutiny confidently.

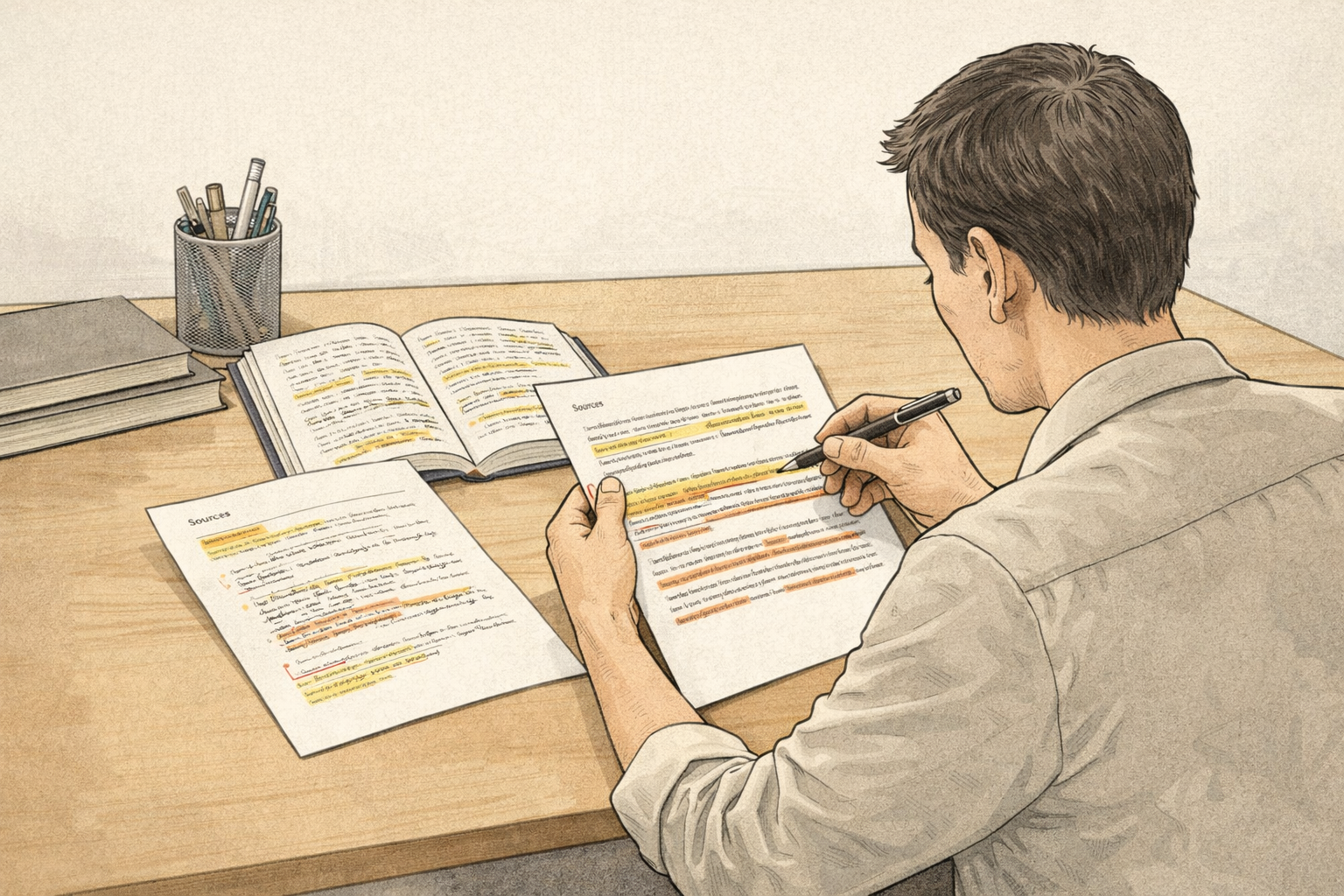

Best Practices to Avoid AI Detection Concerns

Rather than attempting to “beat” detection systems, students should focus on sound academic practices that naturally reduce risk.

- Draft arguments independently before using any AI tools

- Engage critically with academic sources and cite them accurately

- Revise writing manually to reflect personal voice and reasoning

- Retain drafts and notes to document the writing process

These practices strengthen learning outcomes while aligning with institutional expectations.
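Saving dated copies as you go is one low-effort way to retain drafts. The sketch below assumes your draft is a single local file. The names `snapshot_draft` and `draft_history` and the log format are hypothetical choices for illustration. The function copies the draft under a timestamped name and writes a SHA-256 fingerprint of each copy to a log file. Version control tools such as Git offer a more complete record.

```python
import hashlib
import shutil
from datetime import datetime
from pathlib import Path


def snapshot_draft(draft: Path, archive_dir: Path = Path("draft_history")) -> Path:
    """Save a timestamped copy of `draft` and append its SHA-256 hash to a log."""
    archive_dir.mkdir(parents=True, exist_ok=True)
    # Microseconds in the stamp keep two quick snapshots from overwriting each other.
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S-%f")
    target = archive_dir / f"{draft.stem}-{stamp}{draft.suffix}"
    shutil.copy2(draft, target)  # copy2 also preserves the file's modification time
    digest = hashlib.sha256(target.read_bytes()).hexdigest()
    with (archive_dir / "log.txt").open("a", encoding="utf-8") as log:
        log.write(f"{stamp}\t{target.name}\t{digest}\n")
    return target


if __name__ == "__main__":
    draft = Path("essay.docx")  # hypothetical file name; point this at your own draft
    if draft.exists():
        print(snapshot_draft(draft))
```

If you run it at the end of each writing session, you build a dated trail of versions. You can then show that trail if questions about authorship ever arise.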

AI Detection and Dissertations or Major Projects

Long-form academic work such as dissertations attracts heightened scrutiny due to its independent research requirements. AI detection tools may be used, but human evaluation dominates assessment.

Students undertaking substantial projects benefit from structured guidance that prioritises originality and methodological clarity, such as dissertation writing support.

What the Future Holds for AI Essay Detection

Because each improvement in AI models can shift detection accuracy, many academic experts argue that over-reliance on detection tools is unsustainable in the long term.

Instead, universities are increasingly emphasising assessment design, reflective components, and oral defences that prioritise understanding over textual patterns.

Final Guidance on AI Essay Detection

AI essay detection tools are best understood as screening mechanisms, not verdict engines. They highlight potential concerns but cannot replace academic judgment.

Students who focus on genuine engagement, transparent writing practices, and ethical use of technology can approach AI detection with confidence rather than fear.
