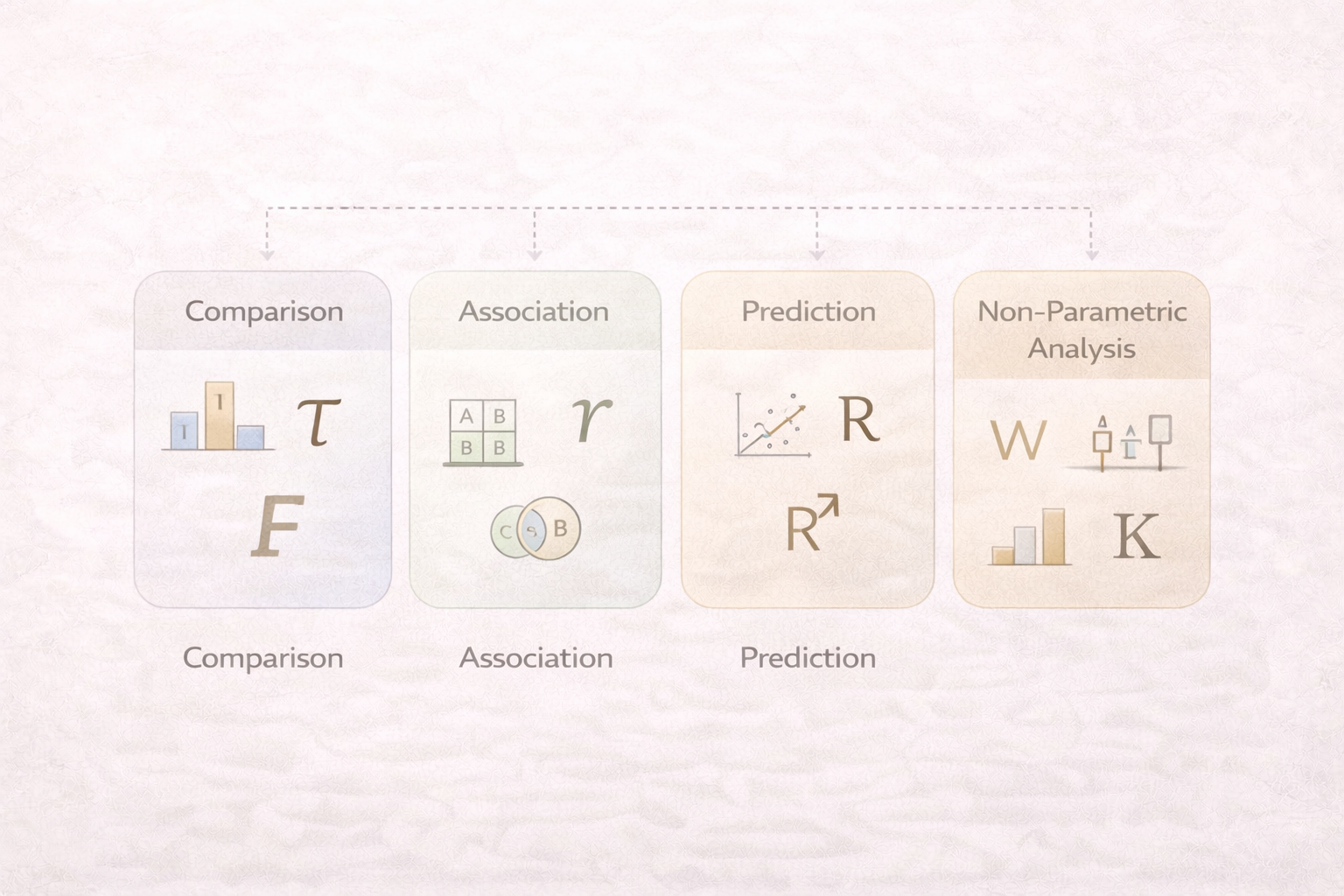

Understanding statistical tests is not about memorising formulas or software commands; it is about making defensible analytical decisions. Many students struggle with statistics not because the calculations are difficult, but because they are unsure which test to use and why. Examiners and reviewers consistently penalise projects that apply inappropriate tests, violate assumptions, or fail to justify analytical choices. The table shown above captures the most commonly used statistical tests in university research, but correct usage requires deeper conceptual understanding.

This guide explains the logic behind major statistical tests, what each test is designed to answer, the assumptions that must be met, and the types of research questions each test supports. It is written for undergraduate, postgraduate, and early-stage research students who want to move beyond guesswork and demonstrate methodological competence. Where helpful, this article links to Epic Essay resources that strengthen research design and methodological justification.

Why choosing the correct statistical test matters academically

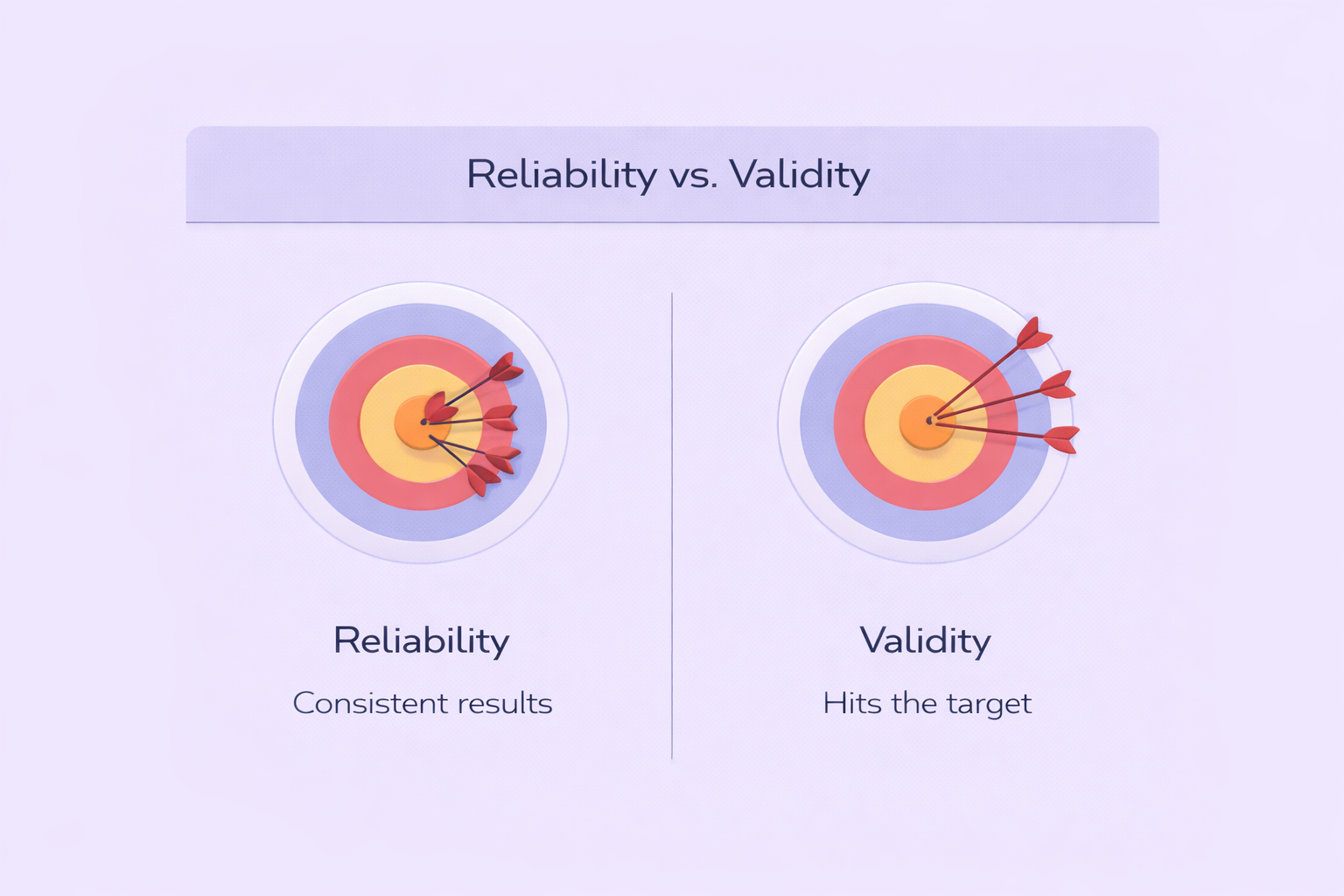

Statistical tests are not interchangeable tools. Each test is designed to answer a specific type of research question under specific conditions. When a test is misapplied, the results may appear numerical and “scientific” but are methodologically invalid. Examiners and peer reviewers are trained to detect these mismatches quickly, especially when assumptions such as normality, independence, or equal variance are ignored.

At university level, statistical analysis is assessed not only on output, but on reasoning. You are expected to explain why a particular test is appropriate given your data type, research design, and hypotheses. This is why statistics is inseparable from research methodology. If you need clarity on how analytical choices fit within broader research design, this guide on methods versus methodology provides essential context.

Using a statistical test without checking its assumptions undermines the credibility of the entire study.

Key factors that determine which statistical test to use

Before selecting a statistical test, researchers must evaluate several foundational factors. The first is the type of variables involved—whether they are categorical, ordinal, or continuous. The second is the number of groups or variables being compared. The third is the study design, such as whether observations are independent or paired. Finally, researchers must assess whether key assumptions, such as normal distribution or equal variance, are satisfied.

Students often select tests based on surface cues (for example, “I am comparing two groups, so I use a t-test”) without checking whether those groups are independent, whether the data are normally distributed, or whether a non-parametric alternative is more appropriate. Strong research work shows awareness of these decision points and explicitly justifies the final choice of test.
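The decision points above can be written down as a small lookup. The following is only an illustrative sketch: the function name `suggest_test`, its parameters, and the category labels are hypothetical, and it covers only the tests discussed in this guide. It does not replace checking the assumptions on your own data.

```python
def suggest_test(outcome, n_groups=2, paired=False, parametric_ok=True):
    """Suggest a test from variable type, group count, design and assumptions.

    outcome: "continuous", "ordinal" or "categorical" (hypothetical labels).
    n_groups: number of groups or conditions being compared.
    paired: True when the same units are measured more than once.
    parametric_ok: True when normality and equal-variance checks look acceptable.
    """
    if outcome == "categorical":
        if paired:
            return "McNemar's Test"
        return "Chi-Square test (Fisher's Exact Test for small samples)"
    if outcome == "ordinal":
        # Ordinal data do not support mean-based (parametric) tests.
        parametric_ok = False
    elif outcome != "continuous":
        raise ValueError(f"unknown outcome type: {outcome!r}")

    if n_groups == 2:
        if paired:
            return "paired t-test" if parametric_ok else "Wilcoxon Signed-Rank test"
        return "independent t-test" if parametric_ok else "Mann-Whitney U test"
    if n_groups > 2 and not paired:
        return "one-way ANOVA" if parametric_ok else "Kruskal-Wallis test"
    raise ValueError("this design is not covered by the sketch")


print(suggest_test("continuous", n_groups=2))
print(suggest_test("ordinal", n_groups=3))
```

Writing the choice out this way makes each justification explicit: every branch corresponds to a sentence you should be able to defend in your methodology chapter.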

t-Test: comparing means between two groups

The t-test is one of the most widely used statistical tests in academic research. It is designed to compare the means of two groups to determine whether any observed difference is statistically significant. Common applications include comparing test scores between two teaching methods or measuring differences between treatment and control groups.

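However, the independent-samples t-test relies on important assumptions: the data in each group should be approximately normally distributed, observations should be independent, and, for the classic Student’s version, the groups should have similar variances (Welch’s t-test relaxes this last requirement). When both sets of measurements come from the same participants, a paired t-test is required instead. When these assumptions are violated, results can be misleading. Students frequently lose marks for using a t-test when data are clearly skewed, or when sample sizes are very small and no justification is given.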

When normality is questionable, non-parametric alternatives such as the Mann–Whitney U test may be more appropriate. The key academic skill is not knowing every test, but knowing when a commonly used test becomes inappropriate.
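As a rough illustration of this workflow, the sketch below checks normality and equal variance before choosing between a t-test and the Mann–Whitney U test. It assumes SciPy is installed; the function name `compare_two_groups`, the 0.05 cut-off, and the scores are made up for the example. Formal assumption tests are only one input, and plots such as histograms or Q-Q plots should inform the decision as well.

```python
from scipy import stats


def compare_two_groups(a, b, alpha=0.05):
    """Compare two independent samples after screening the t-test assumptions."""
    # Shapiro-Wilk: a small p-value suggests the group is not normally distributed.
    normal = all(stats.shapiro(group)[1] > alpha for group in (a, b))
    if not normal:
        result = stats.mannwhitneyu(a, b, alternative="two-sided")
        return "Mann-Whitney U test", result.pvalue

    # Levene: a small p-value suggests the group variances differ.
    equal_var = stats.levene(a, b).pvalue > alpha
    result = stats.ttest_ind(a, b, equal_var=equal_var)
    name = "Student's t-test" if equal_var else "Welch's t-test"
    return name, result.pvalue


# Hypothetical exam scores under two teaching methods.
method_a = [72, 75, 68, 80, 77, 74, 71, 79]
method_b = [65, 70, 62, 68, 66, 71, 64, 69]

test_name, p_value = compare_two_groups(method_a, method_b)
print(f"{test_name}: p = {p_value:.4f}")
```

Reporting which branch was taken, and why, is exactly the kind of justification examiners look for.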

ANOVA: comparing means across more than two groups

Analysis of Variance (ANOVA) extends the logic of the t-test to situations involving more than two groups. It allows researchers to test whether at least one group mean differs significantly from the others in a single test, avoiding the inflated Type I error rate that accumulates when several separate t-tests are run. ANOVA is commonly used in education, psychology, and experimental sciences.

Like the t-test, ANOVA assumes normally distributed data, homogeneity of variance, and independent observations. A frequent student error is running ANOVA correctly but failing to conduct or interpret post-hoc tests, which are necessary to identify where group differences actually occur. Examiners expect students to demonstrate awareness of this analytical sequence.

When assumptions are violated, the Kruskal–Wallis test provides a non-parametric alternative. Justifying this switch shows strong methodological awareness.
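The analytical sequence described above (omnibus test first, post-hoc comparisons second) might look like the following sketch. It assumes SciPy is available; the group names and scores are invented, and the post-hoc step uses Bonferroni-corrected pairwise t-tests purely because the correction is easy to follow. Tukey's HSD is a common alternative.

```python
from itertools import combinations

from scipy import stats

# Hypothetical scores for three teaching formats.
groups = {
    "lecture": [62, 65, 59, 70, 66, 63],
    "seminar": [71, 74, 69, 77, 72, 75],
    "online": [64, 61, 67, 60, 66, 63],
}


def anova_with_posthoc(groups, alpha=0.05):
    """Run one-way ANOVA, then Bonferroni-corrected pairwise t-tests if significant."""
    omnibus = stats.f_oneway(*groups.values())
    results = {"omnibus_p": omnibus.pvalue, "pairs": {}}

    # Post-hoc tests are only interpreted when the omnibus test is significant.
    if omnibus.pvalue < alpha:
        pairs = list(combinations(groups, 2))
        for first, second in pairs:
            raw_p = stats.ttest_ind(groups[first], groups[second]).pvalue
            # Bonferroni: multiply each p-value by the number of comparisons.
            results["pairs"][(first, second)] = min(1.0, raw_p * len(pairs))
    return results


report = anova_with_posthoc(groups)
print(f"ANOVA p = {report['omnibus_p']:.4f}")
for pair, adjusted_p in report["pairs"].items():
    print(pair, f"adjusted p = {adjusted_p:.4f}")
```

If the assumption checks fail, `scipy.stats.kruskal` accepts the groups in the same way as `f_oneway` and provides the Kruskal–Wallis alternative.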

Chi-Square Test: relationships between categorical variables

The Chi-Square test is used to examine whether there is an association between two categorical variables. Typical examples include analysing relationships between gender and preference, education level and employment status, or treatment group and outcome category.

This test does not compare means and is not suitable for continuous data. Its key assumptions include independent observations, a sufficiently large sample size, and expected frequencies that are not too small; a widely used rule of thumb asks for an expected count of at least 5 per cell. A common mistake is applying Chi-Square to small samples where expected counts fall below this threshold, which makes the test’s large-sample approximation unreliable.

When sample sizes are small, Fisher’s Exact Test is the preferred alternative. Recognising when to use Fisher’s test rather than Chi-Square demonstrates analytical maturity.
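One way to make this choice visible is to inspect the expected counts before reporting a result. The sketch below assumes SciPy is installed; the function name `check_association`, the threshold of 5, and the tables are illustrative assumptions rather than fixed rules.

```python
from scipy import stats


def check_association(table, min_expected=5):
    """Use Chi-Square unless a 2x2 table has small expected counts."""
    chi2, p_value, dof, expected = stats.chi2_contingency(table)
    smallest = float(expected.min())
    is_2x2 = len(table) == 2 and all(len(row) == 2 for row in table)

    if smallest < min_expected and is_2x2:
        # Fisher's Exact Test does not rely on the large-sample approximation.
        _, exact_p = stats.fisher_exact(table)
        return {"test": "Fisher's Exact Test", "p_value": exact_p,
                "min_expected": smallest}
    return {"test": "Chi-Square test", "p_value": p_value,
            "min_expected": smallest}


# Hypothetical counts: rows are treatment groups, columns are outcome categories.
pilot_study = [[3, 1], [1, 4]]
main_study = [[30, 20], [20, 30]]

print(check_association(pilot_study))
print(check_association(main_study))
```

Returning the smallest expected count alongside the p-value gives you the figure needed to justify the choice of test in your write-up.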

Pearson Correlation: measuring linear relationships

Pearson correlation measures the strength and direction of a linear relationship between two continuous variables. It is frequently used in social sciences to explore associations such as age and income, study time and grades, or stress and performance.

Correlation does not imply causation, yet students frequently overinterpret correlation coefficients as evidence of causal relationships. Examiners and reviewers penalise this heavily. Pearson correlation also assumes linearity, normality, and homoscedasticity—conditions that are often ignored in student projects.

If relationships are non-linear or data are ordinal, alternatives such as Spearman’s rank correlation are more appropriate. For guidance on avoiding causal overclaims, this causal inference guide is highly recommended.
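The difference between the two coefficients is easiest to see on a relationship that is perfectly monotonic but not linear. The following sketch assumes SciPy is installed and uses invented data in which one variable is simply the cube of the other.

```python
from scipy import stats

# Made-up data: the score always rises with hours, but along a curve.
hours = [1, 2, 3, 4, 5, 6, 7, 8]
score = [h ** 3 for h in hours]

pearson_r, _ = stats.pearsonr(hours, score)
spearman_rho, _ = stats.spearmanr(hours, score)

# Pearson measures linear association; Spearman measures monotonic association
# because it works on the ranks of the values.
print(f"Pearson r    = {pearson_r:.3f}")
print(f"Spearman rho = {spearman_rho:.3f}")
```

Spearman's coefficient reaches its maximum here because the ordering of the two variables agrees perfectly, while Pearson's coefficient is lower because the points do not lie on a straight line. Neither value says anything about whether one variable causes the other.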

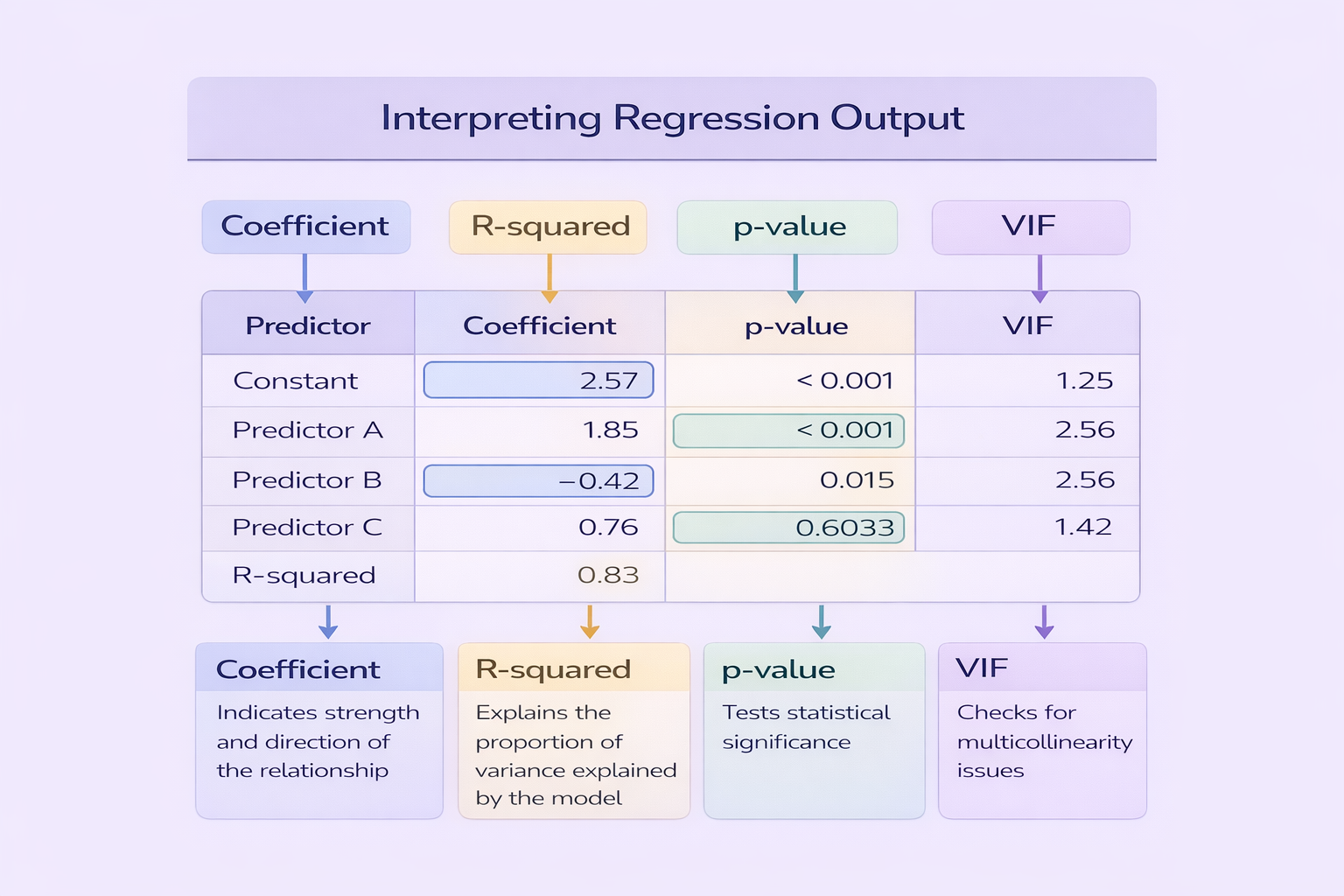

Regression analysis: predicting outcomes

Regression analysis allows researchers to examine how one or more independent variables predict a continuous dependent variable. It is a powerful tool widely used in economics, health sciences, education, and business research.

Regression requires careful interpretation. Assumptions such as linearity, independence of errors, and homoscedasticity must be checked and reported. Students often focus on coefficients and p-values while ignoring diagnostic checks, which weakens credibility. Regression is also frequently misused to imply causation without appropriate design.

Strong academic work explains what regression can and cannot show, links findings back to theory, and discusses limitations transparently.
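A minimal sketch of fitting a simple regression and then looking at the residuals, rather than stopping at the coefficient and p-value, is shown below. It assumes NumPy and SciPy are installed; the function name `fit_with_diagnostics` and the data are hypothetical, and the two checks are rough screens that should be read alongside residual plots.

```python
import numpy as np
from scipy import stats


def fit_with_diagnostics(x, y):
    """Fit y = intercept + slope * x and return basic residual diagnostics."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)

    fit = stats.linregress(x, y)
    fitted = fit.intercept + fit.slope * x
    residuals = y - fitted

    # Screen 1: Shapiro-Wilk on residuals (small p suggests non-normal errors).
    _, normality_p = stats.shapiro(residuals)
    # Screen 2: rank correlation between |residuals| and fitted values
    # (small p suggests the spread changes with the prediction).
    _, spread_p = stats.spearmanr(np.abs(residuals), fitted)

    return {
        "slope": fit.slope,
        "intercept": fit.intercept,
        "slope_p": fit.pvalue,
        "residuals": residuals,
        "residual_normality_p": normality_p,
        "residual_spread_p": spread_p,
    }


# Hypothetical data: weekly study hours and a test mark.
study_hours = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
marks = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1, 18.0, 19.9]

summary = fit_with_diagnostics(study_hours, marks)
print(f"slope = {summary['slope']:.3f}, p = {summary['slope_p']:.4g}")
print(f"residual normality p = {summary['residual_normality_p']:.3f}")
print(f"residual spread p    = {summary['residual_spread_p']:.3f}")
```

Even when these checks look acceptable, a significant slope describes prediction within the observed data; it does not establish causation without an appropriate design.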

Non-parametric tests: when assumptions are violated

Non-parametric tests provide robust alternatives when data do not meet parametric assumptions. The Mann–Whitney U test compares distributions between two independent groups, while the Kruskal–Wallis test extends this logic to more than two groups.

The Wilcoxon Signed-Rank test is used for paired or related samples, such as pre-test and post-test designs. These tests are especially valuable in small-sample studies or when data are ordinal rather than continuous.

Students sometimes believe non-parametric tests are “inferior.” In reality, choosing a non-parametric test when appropriate demonstrates strong methodological judgement. Examiners reward correct reasoning over unnecessary complexity.
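For a pre-test and post-test design, a short sketch of the Wilcoxon Signed-Rank test is shown below. It assumes SciPy is installed, and the scores are invented. The two lists must be in the same participant order, because the test works on the difference within each pair.

```python
from scipy import stats

# Hypothetical scores for the same eight participants before and after a course.
pre_test = [12, 15, 11, 18, 14, 16, 13, 17]
post_test = [14, 18, 12, 22, 19, 22, 20, 25]

# The test ranks the absolute paired differences and compares the rank sums
# of the positive and negative differences.
result = stats.wilcoxon(pre_test, post_test)
print(f"W = {result.statistic}, p = {result.pvalue:.4f}")
```

Using the Mann–Whitney U test on these data would ignore the pairing and treat the two sets of scores as coming from different people, which does not match the design.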

McNemar’s Test and Fisher’s Exact Test: specialised categorical analyses

McNemar’s Test is designed for paired categorical data, such as before-and-after studies with binary outcomes. Because the same participants are measured twice, the observations are not independent, and applying a standard Chi-Square test to such data is a common misuse. McNemar’s Test is the appropriate choice for these repeated-measures designs.

Fisher’s Exact Test is used for small sample sizes in 2×2 contingency tables. Unlike Chi-Square, it does not rely on large-sample approximations, making it ideal for pilot studies or niche research contexts.

Correct application of these specialised tests signals advanced statistical literacy, especially in dissertations and publishable research.
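The logic of McNemar’s Test can be illustrated with its exact form, which only looks at the discordant pairs, that is, participants whose answer changed between the two measurements. The sketch below uses only the Python standard library; the function name `mcnemar_exact` and the counts are made up for the example.

```python
from math import comb


def mcnemar_exact(yes_to_no, no_to_yes):
    """Exact two-sided McNemar p-value from the two discordant pair counts.

    Under the null hypothesis, a participant who changes answer is equally
    likely to change in either direction, so the smaller count follows a
    binomial distribution with probability 0.5.
    """
    n = yes_to_no + no_to_yes
    if n == 0:
        return 1.0  # nobody changed, so there is no evidence of a shift
    k = min(yes_to_no, no_to_yes)
    one_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * one_tail)


# Hypothetical before/after survey: 1 person switched from yes to no,
# 9 people switched from no to yes. Unchanged answers do not enter the test.
p_value = mcnemar_exact(yes_to_no=1, no_to_yes=9)
print(f"exact McNemar p = {p_value:.4f}")
```

Participants who gave the same answer both times carry no information about change, which is why only the two discordant counts appear in the calculation.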

How examiners and supervisors evaluate statistical test selection

Examiners do not expect perfection, but they do expect justification. Marks are awarded for explaining why a test was chosen, acknowledging assumptions, and discussing limitations. Statistical analysis that is technically simple but well justified often scores higher than complex analysis applied incorrectly.

Supervisors and reviewers look for alignment between research questions, data, and analysis. If this alignment is unclear, they may question the validity of the entire study. Using structured planning tools such as this academic checklist can help ensure analytical coherence.

| Error | Why it matters | Academic consequence |
|---|---|---|
| Ignoring test assumptions | Violates validity of results | Loss of marks or reviewer rejection |
| Using parametric tests on non-normal data | Inflates Type I or II errors | Methodology criticised as flawed |
| Interpreting correlation as causation | Misrepresents findings | Serious conceptual error |
| Failing to justify test choice | Lack of methodological reasoning | Lower methodology scores |

Table 1 highlights that statistical mistakes are not minor technicalities—they directly affect academic evaluation.

Developing confidence in statistical decision-making

Confidence in statistics comes from understanding purpose, not memorisation. When you can explain what question a test answers, what assumptions it makes, and what its results mean, you move from mechanical analysis to scholarly reasoning.

If you are working on a dissertation or research project and need structured support with statistical planning, interpretation, or academic presentation, Dissertations and Research Papers support and Academic Editing and Proofreading can help ensure methodological clarity without compromising academic integrity.

Choosing the right statistical test with academic confidence

The correct statistical test is the one that matches your research question, data type, and assumptions—not the one that looks most advanced. By grounding your analysis in clear reasoning and transparent justification, you demonstrate academic competence and protect your research from avoidable criticism.

Approach statistics as a decision-making process rather than a formula exercise. When you do, statistical analysis becomes a strength rather than a source of anxiety.
