Learning how to review a research paper is one of the fastest ways to improve your own academic writing. Whether you are writing a dissertation, preparing a journal-style critique, or completing a peer review exercise in class, the goal is not to “find flaws” for their own sake. The goal is to evaluate whether a paper makes a meaningful contribution, uses defensible methods, reports evidence transparently, and communicates findings in a form other researchers can assess and build on. That is why a structured approach matters: it prevents you from over-focusing on grammar while missing deeper issues such as weak research design or unsupported claims.

The framework used in this guide reduces peer review to five questions: Significance, Novelty, Methodology, Verifiability, and Presentation. This article expands that checklist into an academically rigorous reviewing method that aligns with common university marking criteria (critical evaluation, methodological awareness, evidence-based judgement, and academic writing conventions). Where helpful, you can deepen related research skills using Epic Essay guides on what makes a research question publishable, methods vs methodology, and causal inference.

What counts as a “good” research paper review at university level

A high-quality review demonstrates three competencies at the same time. First, it shows comprehension: you can accurately state what the paper claims, what evidence it uses, and what it concludes. Second, it shows evaluation: you can judge whether the design and reasoning justify the claims. Third, it shows scholarly positioning: you can explain why the paper matters in a field, how it relates to existing literature, and what limitations affect interpretation. Many students accidentally write “summaries disguised as reviews,” which often score poorly because they describe the paper without evaluating it.

Good reviews are also disciplined in tone. Review writing is not a debate performance; it is an evidence-based assessment. That means you should separate “major issues” (those that affect validity or contribution) from “minor issues” (those that improve clarity but do not change the results). If you need a structured approach to final checks and quality control for your own writing, the logic in Essay Writing Checklist for Academic Success transfers well to reviewing because it trains you to distinguish structure, evidence, and presentation.

A strong review makes claims about the paper only when those claims are supported by what the paper actually shows, reports, or justifies.

The 5-question review framework: how to use it without being superficial

The five questions work best when you treat them as five dimensions of academic credibility, not as five bullet points. Each dimension asks you to check alignment: alignment between the research problem and its value (significance), between the claimed contribution and existing work (novelty), between the question and the method (methodology), between the claims and the transparency of evidence (verifiability), and between the content and the way it is communicated (presentation). If one alignment fails, the paper may still be interesting, but it becomes less defensible academically.

The table below provides an examiner-friendly way to apply the framework consistently. You can use it to write a peer review, prepare a journal club critique, or structure a “critical evaluation” section in an assignment.

| Review question | What you should look for in the paper | Common red flags that weaken credibility |
|---|---|---|
| Significance | A clear problem statement, a justified audience or stakeholder, and a rationale showing why the work matters academically or practically | Vague importance claims, unclear target user, or a topic that is described as “important” without evidence of an actual gap or consequence |
| Novelty | A specific contribution compared to recent literature, with a clearly stated gap and an explanation of what this study adds | Novelty claims that are asserted rather than demonstrated, or a “gap” that is simply a lack of local studies without conceptual justification |
| Methodology | Design choices that logically answer the research question, justified sampling and measurement, and claims that match the method’s limits | Methods that are under-described, a mismatch between question and design, or causal language unsupported by the study design |
| Verifiability | Transparent reporting, clear analytic steps, and enough detail for another researcher to understand or replicate the work | Missing data-handling detail, unclear analysis pipeline, selective reporting, or results presented without sufficient supporting evidence |
| Presentation | Coherent structure, accurate tables and figures, consistent terminology, and clear writing that does not distort meaning | Disorganised sections, confusing tables, inconsistent terms, or language that makes interpretation ambiguous or unreliable |

Table 1 also helps you write a stronger review narrative: you are not listing opinions; you are diagnosing alignment problems that affect academic quality.

1) Significance: Is this research important in a defensible way?

Significance is not the same as popularity. A paper is significant when it addresses a problem that matters for a field’s theory, method, or practice, and when it justifies that importance with scholarly reasoning. In a good paper, significance appears early and clearly: the introduction frames a research problem, explains the consequences of not addressing it, and identifies who benefits from the work (researchers, practitioners, policymakers, learners, patients, or institutions). As a reviewer, you should ask whether the paper’s “importance” is argued or merely declared.

Students often review significance superficially by writing “this is important” without specifying why. A more academic approach is to identify the paper’s implied value claim and test it. For example, if a paper claims to improve educational outcomes, does it define which outcomes and for whom? If it claims to address a policy issue, does it explain the mechanism by which evidence informs policy? If your review needs stronger framing logic, especially around narrowing from a broad context to a specific problem, use How to Start an Essay Effectively as a model for disciplined academic framing.

Finally, significance is often tied to scope. A small study can be significant if it answers a well-defined question with careful reasoning. A large study can have limited significance if it asks a vague question and produces predictable findings. Your role is to judge the match between the paper’s aims and the value it claims to produce.

2) Novelty: Is the contribution genuinely new, or only rephrased?

Novelty is one of the most misunderstood parts of peer review. Editors and lecturers rarely expect “never-before-seen” topics. They expect a paper to add something: a new dataset, a new comparison, a stronger method, a theoretical refinement, a replication that changes confidence, or a context that challenges generalisability. Reviewing novelty means checking whether the paper demonstrates awareness of recent literature and positions its contribution precisely against what already exists.

The most common failure is “novelty by assertion.” Papers sometimes claim to be the first study of something, but do not show a careful search or a meaningful gap. As a reviewer, you can test novelty by asking whether the literature review builds logically to a specific research gap. If the gap is weak, the entire study can become a solution in search of a problem. For a structured way to evaluate novelty and gap quality, use What Makes a Research Question Publishable? as a benchmark, even if your task is only a class critique.

Novelty is credible when it is demonstrated through positioning in current literature, not when it is claimed as a slogan.

In your written review, avoid vague statements like “the novelty is unclear.” Instead, specify what the paper claims is new, identify what prior work seems close, and explain what additional positioning would be needed to establish the contribution convincingly.

3) Methodology: Is the study executed correctly for the question it asks?

Methodology is where many papers become vulnerable, because it determines what the results can legitimately mean. Reviewing methodology requires you to evaluate fit: does the design answer the research question? Are sampling and measurement choices justified? Are analytic decisions transparent and appropriate? At university level, the strongest reviews show awareness that different questions require different methods, and that “good methods” are not universal; they are appropriate to purpose.

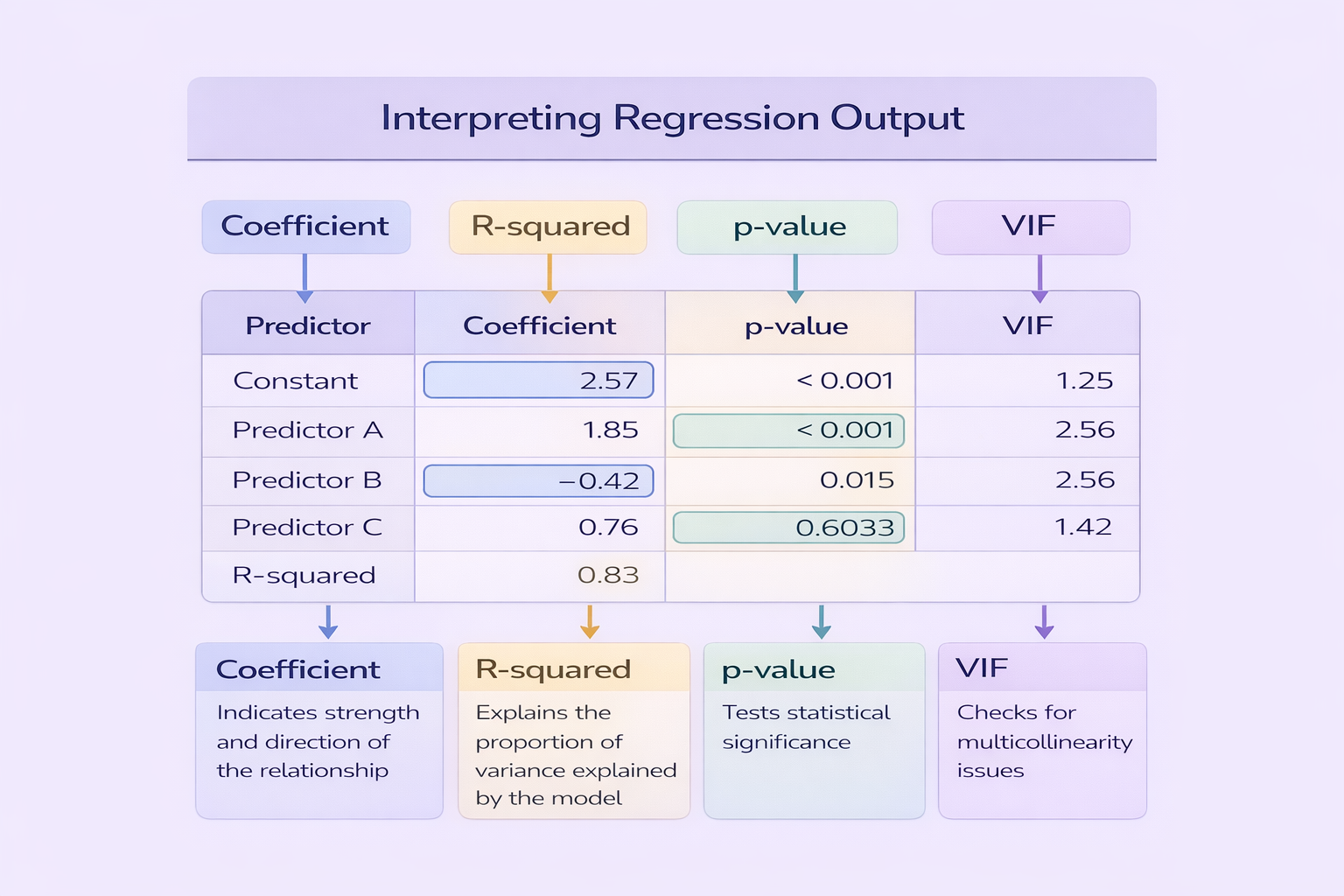

A reliable starting point is to separate methods from methodology. Methods are the procedures (sampling, instruments, coding, statistical tests). Methodology is the reasoning that justifies those procedures and explains their limits. If the paper describes what it did but never justifies why, reviewers should flag this as a credibility risk. For students, the guide on research methods vs research methodology helps you write method critiques with correct academic language.

Another frequent methodological issue is overclaiming causality. If a paper uses observational data, cross-sectional surveys, or correlational designs, causal verbs are often unjustified unless the design includes credible causal identification. Reviewers should not merely say “causality is wrong”; they should explain which design elements are missing (randomisation, credible counterfactual, confound control strategy, temporal ordering) and how that limits conclusions. If you want a clear way to evaluate causal language, consult Understanding Causal Inference and apply its logic to the manuscript’s claims.

4) Verifiability: Can another researcher audit or replicate what was done?

Verifiability is the bridge between research as private work and research as public knowledge. A paper is verifiable when it provides enough information for others to understand how findings were produced and to evaluate whether conclusions follow from evidence. In quantitative work, this often includes clarity on data sources, variable definitions, exclusion criteria, statistical models, and robustness checks. In qualitative work, it includes transparency about sampling, analytic steps, coding decisions, and how interpretations were tested against the dataset.

Students sometimes think verifiability is only relevant for lab sciences, but it matters across fields because it protects against “results by narration.” If you cannot see the analytic pathway, you cannot evaluate the claim. In your review, look for concrete reporting: What data were used? What steps were taken? What decisions were made when data were messy or ambiguous? Are limitations acknowledged honestly? If the paper relies on appendices for essential detail, check that those appendices are referenced in the main text and structured clearly; Report With Appendices provides a useful standard for what well-managed supplementary material looks like.

For class reviews, you do not need to demand open datasets, but you should evaluate whether the paper is written in a way that makes critical evaluation possible. Verifiability is one of the strongest indicators that a manuscript is “peer-review ready” rather than merely persuasive.

5) Presentation: Is the research communicated accurately and professionally?

Presentation is not just “writing quality.” It is whether the structure, tables, figures, and language allow a reader to interpret the evidence correctly. A paper can contain good research but still fail to communicate it in a scholarly way. Reviewers should check whether the abstract matches the paper, whether the introduction signals a clear roadmap, whether results are presented before interpretation (where appropriate), and whether the discussion stays proportional to evidence.

Presentation problems also include misleading visuals, tables that omit essential context, inconsistent terminology, and poor signposting that forces readers to guess what each section is doing. Many student manuscripts suffer from structural drift: the paper begins as one question and ends as another. If you need a model for disciplined structure and coherent progression, Essay Structure Explained for University Students is a practical guide that translates well to research papers.

Language clarity matters most when ambiguity changes meaning. Reviewers should distinguish minor grammar issues from clarity failures that distort claims. For high-stakes submissions, formal academic editing can be appropriate, not to change ideas but to ensure the writing communicates the research precisely. If you need professional polishing support, Essay Editing Services is relevant when the goal is clarity, consistency, and formatting readiness.

How to turn your review into a clear recommendation

Most peer review tasks require some form of judgement: accept, minor revision, major revision, or reject (or, in coursework terms, “strong,” “adequate,” “weak”). The most academic way to make that judgement is to connect it to the five questions. A paper with strong significance and novelty but major methodological flaws is not “almost ready”; it needs major revision because validity is at stake. A paper with strong methods and results but weak presentation may need only minor revision, because clarity is fixable without changing the underlying study.

The table below offers a disciplined decision tool you can use in assignments and peer review exercises. It avoids the common student mistake of basing the final judgement purely on writing style.

| Recommendation | Typical profile across the five questions | What your review should prioritise |
|---|---|---|
| Accept / Strong paper | Significance and novelty are clearly demonstrated; methodology fits the question; reporting is transparent; presentation supports accurate interpretation | Highlight the contribution precisely, note minor improvements, and confirm that claims match evidence |
| Minor revision | Core contribution and method are sound, but clarity, structure, referencing consistency, or figure/table presentation needs improvement | Provide specific presentation and clarity fixes, focusing on sections where ambiguity affects interpretation |
| Major revision | Potential contribution exists, but there are serious issues in novelty positioning, methodological justification, or reporting transparency | Identify the few high-impact problems that must be solved for credibility, and explain why they matter academically |
| Reject / Fundamentally weak | Significance is unclear, novelty is not established, and methodology or reporting problems undermine validity | Explain the core misalignments and what would need to change for a future study, not just superficial edits |

Table 2 also helps you write efficiently: you do not need to list every minor error if the core methodological fit fails. Good reviews prioritise what affects scholarly validity and contribution.

How to review a research paper confidently using five questions

The five-question framework works because it mirrors how academic knowledge is judged: value, contribution, credibility, transparency, and communication. If you apply it carefully, your review becomes structured and persuasive without becoming hostile or vague. Start by summarising the paper accurately in two to four sentences, then evaluate each question with evidence from the manuscript, and end with a recommendation justified by the highest-impact issues.

Most importantly, remember that reviewing is a transferable skill. When you learn to spot unclear questions, weak method justification, unsupported claims, and reporting gaps in other people’s work, you become much less likely to make those mistakes in your own. That is why peer review is one of the most practical academic training tools available to students at any level.
